SOCIAL & COMMUNITY-BASED MEASURES

Just as the state, traditional media and civil society must address atrocity speech at higher levels, so too must individuals on the ground. For them, social media has become a routine means of communicating widely, and as a result, people need to be alert to how their social media communications could contribute to atrocity speech. This section addresses how governments, social media platforms and users can attend to those potential consequences, beginning with the legal realm in which social media operates.

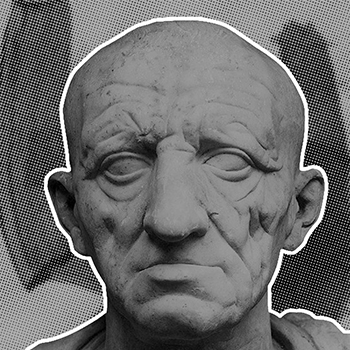

[H]ate speech is both a calculated affront to the dignity of vulnerable members of society and a calculated assault on the public good of inclusiveness.

– Jeremy Waldron, The Harm in Hate Speech (2012), pp. 5-6

New ISIS Video Highlights What Child Soldiers Go Through

This video demonstrates how ISIS attempts to indoctrinate youth through online hate speech, including instructions to kill Yazidis. Source video uploaded to Yahoo News, reported by Beth Greenfield.

What Happened Next After a Facebook Post Caused Tensions in Ethiopia? | Edith Kimani in Ethiopia

One well-known incident, chronicled by the media outlet Vice, followed the murder of an Oromo activist in Ethiopia in 2019: “Social media users were quick to assert, inaccurately and without evidence, that the murder was committed by a ‘neftegna’ – an increasingly problematic term that has become a dogwhistle call to demonize and attack Amhara people in parts of Oromia.” There was “almost-instant and widespread sharing of hate speech and incitement to violence on Facebook.” Mob violence led to at least 166 deaths and, according to Vice, perpetrators “lynched, beheaded, and dismembered their victims.” It was not the only incident of its kind, however, as the video above shows. Source video uploaded to YouTube by DW the 77 Percent.

‘Increasingly, the Indian Is a Human Being Just Like Us,’ Says Bolsonaro in Social Media Broadcast

In this video, a Brazilian news segment addresses President Jair Bolsonaro’s degrading, dehumanizing comments about the indigenous peoples of Brazil’s Amazon region. Source video uploaded to Globo.

What should be the response?

-

Governments

In order to appreciate how companies and individuals can act responsibly online with respect to incendiary speech, we first need to understand the legal framework in which social media operates.

The internet famously developed in the absence of much government regulation. Governments have recently made efforts to exert greater control, though often primarily in the interest of protecting privacy – most prominently in the European Union's General Data Protection Regulation (GDPR) – or in the form of outright censorship, including extended shutdowns of the entire internet during times of conflict, as the organization NetBlocks has documented (for example, in Ethiopia in November 2020 and Myanmar in February 2021).

National governments can play a much larger role than they generally do in addressing atrocity speech. Most importantly, of course, they can refrain from promulgating atrocity speech themselves. In addition, they can adopt laws and policies setting guidelines for online speech (as in Germany, where a law prohibits neo-Nazi rhetoric and the 2017 Netzwerkdurchsetzungsgesetz, or NetzDG (Network Enforcement Act), extends enforcement of such limits to social media platforms). They can also press companies to crack down on incitement and instigation, as France attempted with a 2020 law requiring social media networks to remove hate speech posts within 24 hours of their appearance, although France's Constitutional Council struck down that requirement before it took effect.

International criminal law has developed largely in advance of (or with insufficient attention to) the internet and social media. Jurists and policymakers should recognize that online communication plays a crucial role in fomenting atrocities, and should establish clear guidelines regarding not only the admissibility of online evidence but also responsibility for online speech crimes.

-

Social Media Companies

Having examined the legal framework, let us now consider how corporations can operate responsibly with respect to regulating inflammatory rhetoric and images. They can do so by a number of means, including:

- Identifying the prospect that one’s product (such as a social media platform) might be used by some actors to persecute or incite violence against a minority group;

- Exercising a duty of care, under the banner of corporate social responsibility, to withhold the introduction of a product that could be used to amplify atrocity speech, even if doing so might reduce profits in the short run;

- Cooperating with courts and other transitional justice mechanisms to ensure access to atrocity speech expressed on corporate platforms – whether social media or comments sections;

- Committing to community standards for moderating content and respecting privacy, through a consensus approach to defining and detecting atrocity speech, as discussed below.

Community Standards

United States-based social media companies (or SMCs) tend to share two sometimes-contradictory commitments. On one hand, they generally follow the principle of hewing to “community standards,” barring users from posting content that is abusive or threatening, violent, pornographic, or illegal (such as copyright-infringing material).

On the other hand, Section 230 of the Communications Decency Act (CDA) shields SMCs from most liability, principally civil liability, that might otherwise be alleged in connection with content users post on their services (federal criminal law is expressly excluded from this protection). As a result, U.S. social media policy is quite speech-friendly compared with other jurisdictions, such as the European Union, whose regulations are far more restrictive and punitive vis-à-vis inflammatory rhetoric.

Defining Atrocity Speech

In the name of “community standards,” SMCs must moderate content that might amount to atrocity speech. However, this may be easier said than done.

PROBLEM

It may sometimes be difficult to identify the line between legitimate (if ugly) political speech and illegitimate atrocity-related speech. But this tension is inherent in regulating any kind of speech, and it would be a mistake to cede all ability to address speech that may violate international criminal law simply because doing so is not easy.

SOLUTION

SMCs, either individually or through an industry-level consortium, should establish clear principles regarding what will be classified as non-permissible atrocity speech. Such principles are delineated in 'Atrocity Speech Law: Foundation, Fragmentation, Fruition'.

Section 230 of the CDA should not shield social media companies from liability for disseminating atrocity speech. A carve-out, like those that already exist for sex-trafficking and intellectual property (including copyright) claims, is needed to ensure that SMCs fulfill their responsibility to detect and take down atrocity speech.

Detecting Atrocity Speech

PROBLEM

Atrocity speech online is often hard to detect. Defining atrocity speech is one thing; finding it is another. Most SMCs already have some efforts under way:

- Robust user-complaint systems that cover posts involving problematic content, such as abuse or threats.

- Algorithms that detect standards-violating content, often supplemented with artificial intelligence (AI) that “learns” to recognize new forms of harmful content even before human engineers can identify them and adjust the code as needed.

SOLUTION

In addition to the measures already in place, SMCs must address three important issues regarding algorithms and AI:

- First, the automated systems must be able to read the script of the communication at issue. This issue has posed a challenge in various human rights hot spots such as Myanmar, Ethiopia, and parts of India.

- Second, not all atrocity speech is communicated through words alone. Images, GIFs, and memes are also forms of communication and, on their own or combined with words or other images, can constitute atrocity speech. Automated detection systems need to learn how to spot such criminal communications.

- Third, a post’s meaning often depends on its context, including not only nonverbal elements but also coded political meanings embedded in cultural conversations (known as “dog whistles”), such as the term “neftegna” in the Ethiopian incident described earlier. Robust human review alongside SMCs’ automated systems is essential to detect such content.

-

The Role of Individuals

We have seen how governments must establish an appropriate legal framework to prevent atrocity speech online and how corporations must promulgate protocols and standards within that framework. Now we should consider how individual users can responsibly engage with the communications ecosystem that this framework creates. This includes:

- Refraining from uttering or amplifying atrocity speech. Most of us lack the intent – and generally the means – to broadcast vile content that might contribute to the commission of mass atrocities. However, any of us may encounter such speech on social media (and in other communications environments). It is important to recognize such speech and the dangers it might portend. Posting one’s own comments affirming such speech, or reposting, liking, retweeting or otherwise amplifying it, only extends those dangers. We must refrain from doing so.

- Employing user-initiated safeguards. Most platforms both have community standards that prohibit atrocity speech and provide a means to report it when it occurs (after which platform moderators consider whether the post should be removed and, in some cases, whether its author should be banned from the platform, temporarily or permanently). Such user-initiated safeguards are a valuable tool, but they are effective only if users are willing to use them.

- Promoting norms of tolerance. Online responsibility should not be limited to reactive options such as reporting. Everyone can contribute to a more productive and positive online environment by creating and spreading norms of tolerance in society. As respect and appreciation for other groups gain favor, division tends to diminish, along with the persecution and targeted violence it can fuel.